Zoe Amin-Akhlaghi, ZAMSTEC Academy of Science and Technology, Austria

Abstract

In the rapidly evolving field of medical imaging, the impact of image bit depth on diagnostic precision is a critical yet often overlooked factor. This article analyzes 8-bit versus 16-bit image processing in the context of medical diagnostics, using DICOM images from the CMB-AML dataset, which focuses on Acute Myeloid Leukemia (AML) detection. We compare the two bit depths through a series of image transformations and assess their influence on image quality, detail resolution, and diagnostic accuracy. Our findings highlight the differences between 8-bit and 16-bit processing and the respective advantages and limitations of each in medical imaging applications. The aim is to guide practitioners in selecting a bit depth that supports better diagnostic outcomes and, in turn, improved patient care.

Introduction

Medical imaging stands as a cornerstone in modern diagnostics, offering an indispensable tool for accurate disease detection, treatment planning, and patient management. The evolution of this field has been marked by significant technological advancements, culminating in sophisticated imaging modalities that rely heavily on digital processing techniques. Among these, the Digital Imaging and Communications in Medicine (DICOM) standard has emerged as a universal format for storing and transmitting medical images. DICOM not only ensures compatibility across different imaging devices but also preserves the essential details required for precise diagnosis, making it a vital component in the healthcare industry.

The quality of medical images is paramount, directly influencing the clinician’s ability to make accurate diagnoses. A critical aspect of image quality is the bit depth used in image processing, which determines the range of gray shades an image can represent: 256 levels at 8 bits versus 65,536 levels at 16 bits. In medical imaging, particularly in complex cases like cancer detection, the subtlety and richness of these gray shades can be the difference between detecting and overlooking a pathological finding. While 8-bit images have been standard in many applications, there is a growing recognition of the potential benefits offered by 16-bit depth, particularly in terms of enhanced contrast resolution and finer detail representation.
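The effect of bit depth on gray-shade resolution can be illustrated with a few lines of arithmetic. The sketch below is illustrative only: the helper names and the two sample intensities are hypothetical, and dropping the low byte is just one common way of reducing 16-bit data to 8 bits.

```python
def gray_levels(bits: int) -> int:
    """Number of distinct gray values an unsigned image of this bit depth can store."""
    return 2 ** bits


def to_8bit(value_16: int) -> int:
    """Reduce a 16-bit sample to 8 bits by dropping the low byte (one common choice)."""
    return value_16 >> 8


print(gray_levels(8))    # 256
print(gray_levels(16))   # 65536

# Two neighbouring intensities that differ by 40 steps at 16 bits...
a, b = 30000, 30040
# ...map to the same 8-bit value, so the difference is lost after conversion.
print(to_8bit(a), to_8bit(b))   # 117 117
```

Every 8-bit gray level therefore stands in for 256 distinct 16-bit levels, which is the loss of contrast resolution discussed above.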

The relevance of this topic is further heightened when considering specific medical conditions like Acute Myeloid Leukemia (AML), a severe and rapidly progressing cancer of the blood and bone marrow. Early and accurate detection of AML is critical for effective treatment and patient survival. In this context, the choice of bit depth in image processing could have a significant impact on the clarity and detail of diagnostic images, potentially affecting the detection and characterization of AML. Therefore, a thorough understanding of the implications of different bit depths on image quality and diagnostic accuracy is not just a technical concern but a clinical imperative.

This article aims to bridge the gap in current knowledge by providing a detailed comparison of 8-bit and 16-bit image processing in medical imaging, with a focus on their application in the detection of AML. Through a meticulous analysis of DICOM images from the CMB-AML dataset, we seek to illuminate the practical differences between these two processing depths and their impact on clinical outcomes. Our investigation is grounded in the belief that every incremental improvement in medical imaging technology can translate into better patient care, underscoring the significance of this research in the broader context of medical diagnostics.

Methodology

In this study, we conducted a detailed comparative analysis of 8-bit and 16-bit image processing, specifically targeting Acute Myeloid Leukemia (AML) detection. The investigation utilized the CMB-AML dataset, comprising a comprehensive collection of CT and XA images. This dataset was systematically selected to represent a wide array of clinical scenarios, particularly emphasizing the intricacies of AML diagnosis.

Dataset Curation and Metadata Assessment:

The initial phase involved a rigorous extraction and evaluation of metadata from the DICOM files within the dataset. Employing custom-developed Python algorithms, we meticulously extracted metadata, deliberately excluding pixel data, to determine the appropriateness of each image series for in-depth analysis. This step was critical in identifying image series that exhibited the requisite quality and characteristics pertinent to our investigative focus.
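A metadata pass of this kind might look like the following minimal sketch. It assumes the pydicom library, whose `dcmread` function accepts `stop_before_pixels=True` to skip the pixel data. The attribute list and the screening rule in `is_candidate` are hypothetical, since the study's actual selection criteria are not stated.

```python
# Hypothetical selection of header attributes; the study's field list is not given.
FIELDS = ("Modality", "BitsAllocated", "BitsStored", "Rows", "Columns",
          "PhotometricInterpretation")


def read_header(path):
    """Read only the DICOM header; stop_before_pixels skips the pixel data."""
    import pydicom  # third-party; imported here so the helpers below work without it
    return pydicom.dcmread(path, stop_before_pixels=True)


def summarize(ds, fields=FIELDS):
    """Collect the selected attributes into a plain dict, using None where absent."""
    return {name: getattr(ds, name, None) for name in fields}


def is_candidate(meta, modalities=("CT", "XA")):
    """Hypothetical rule: wanted modality and more than 8 stored bits per pixel."""
    bits = meta.get("BitsStored") or 0
    return meta.get("Modality") in modalities and bits > 8
```

A series whose images store 8 bits or fewer offers nothing to compare, which is why this sketch filters on `BitsStored`.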

DICOM Image Standardization:

Subsequent to the metadata analysis, the selected DICOM images were converted into a standardized array format conducive to precise image analysis. This conversion process was instrumental in preserving the diagnostic integrity of the images, ensuring no compromise in the data quality. Such standardization was essential for a valid comparative assessment of the impact of bit depth on image quality, specifically in terms of contrast resolution and noise level.
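One way to perform such a conversion is sketched below with NumPy. The function names are hypothetical, and both the rescale step and the min-max normalization are assumptions about what "standardized array format" involves. The input `ds` can be any object exposing `pixel_array` and, optionally, `RescaleSlope` and `RescaleIntercept`, such as a dataset returned by `pydicom.dcmread`.

```python
import numpy as np


def to_array(ds):
    """Return the stored pixel values as a float array in rescaled units."""
    raw = np.asarray(ds.pixel_array)
    slope = float(getattr(ds, "RescaleSlope", 1.0))
    intercept = float(getattr(ds, "RescaleIntercept", 0.0))
    return raw.astype(np.float64) * slope + intercept


def to_uint(arr, bits):
    """Min-max normalise to the full range of an unsigned 8- or 16-bit image."""
    dtype = {8: np.uint8, 16: np.uint16}[bits]
    top = 2 ** bits - 1
    lo, hi = float(arr.min()), float(arr.max())
    if hi == lo:                      # flat image: avoid division by zero
        return np.zeros(arr.shape, dtype=dtype)
    scaled = (arr - lo) / (hi - lo) * top
    return np.round(scaled).astype(dtype)
```

Calling `to_uint(arr, 8)` and `to_uint(arr, 16)` on the same array yields the two versions of an image that the later processing steps compare.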

Image Processing Techniques:

The methodology’s cornerstone was the implementation of a set of predefined image processing techniques on both 8-bit and 16-bit image versions. These techniques, encompassing contrast and brightness adjustments, edge detection, and gradient computations, were applied in various iterations to generate a comprehensive dataset. This methodology enabled an in-depth exploration of the influence of bit depth on critical image quality parameters.

Analytical Framework:

The study employed a dual approach for image analysis. Quantitatively, statistical methods were utilized to discern and quantify differences in key image quality metrics between 8-bit and 16-bit processed images. Qualitatively, the study incorporated evaluations from medical imaging experts, specifically radiologists and oncologists. Their assessments focused on the diagnostic utility of the images in the context of AML detection. This dual approach ensured a balanced examination of the technical and clinical implications of bit depth in medical imaging.
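The text does not name the statistical methods used. As one possible illustration, a paired comparison suits this design because each source image yields one 8-bit and one 16-bit measurement of the same metric. The sketch below computes a paired t statistic with the standard library only; the function name is hypothetical, and this is not claimed to be the study's procedure.

```python
import math
import statistics


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for metric pairs a[i], b[i].

    a[i] and b[i] are the same image-quality metric measured on image i
    after 8-bit and 16-bit processing respectively.
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long samples with at least two pairs")
    diffs = [y - x for x, y in zip(a, b)]
    mean = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)      # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all paired differences are identical")
    return mean / (sd / math.sqrt(len(diffs))), len(diffs) - 1
```

The returned statistic would then be compared against a t distribution with the returned degrees of freedom. A non-parametric alternative such as the Wilcoxon signed-rank test may be preferable when the differences are clearly non-normal.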

This methodology was designed to provide an exhaustive evaluation of the comparative effectiveness of 8-bit versus 16-bit image processing in the realm of medical imaging, with a particular focus on AML detection. The approach was meticulously crafted to align with both the technical exigencies and clinical demands of medical image analysis.

Image Generation and Processing Procedure (Enhanced with Python Code Details)

In this phase of the study, we used a Python-based image processing procedure to examine the differences between 8-bit and 16-bit medical image processing. This section describes the scripting and analytical methods employed, with emphasis on converting standard 8-bit image processing techniques to their 16-bit equivalents, a non-trivial task in medical imaging.

Script Setup and Data Preparation:

The script began with a structured setup, defining directories for both the input (CSV files containing DICOM image paths) and the output (gradient images). Using the os and pandas libraries, the script organized the data systematically, laying the foundation for the ensuing image processing.

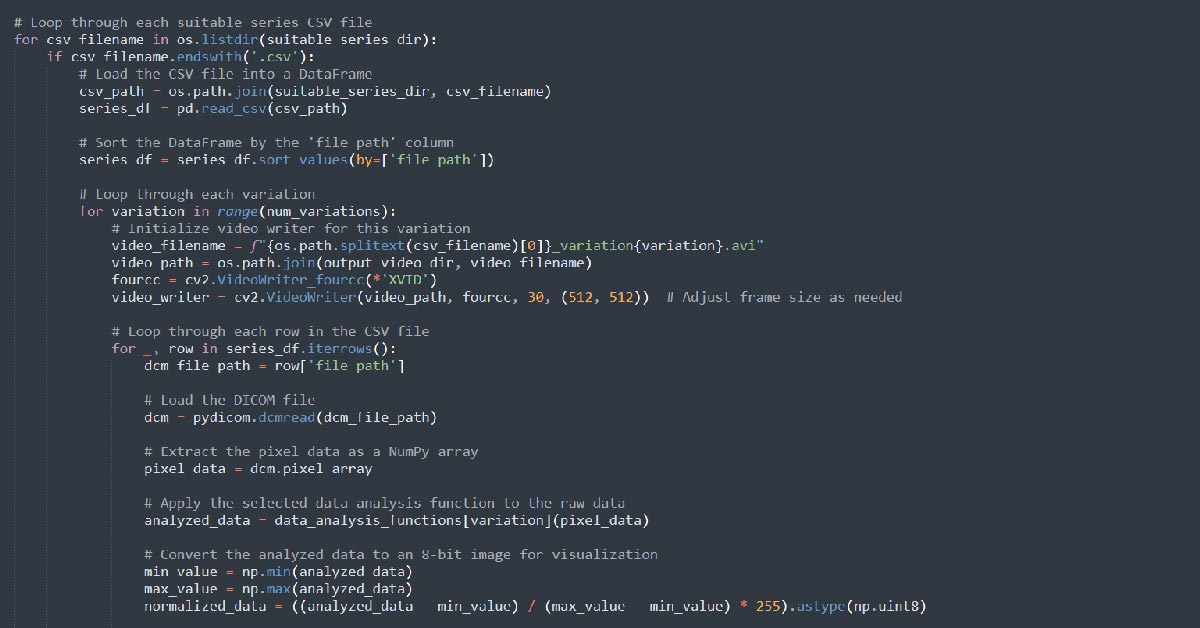
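The setup described above can be sketched as follows. The function names and the CSV column name `path` are assumptions for illustration, not details taken from the original script.

```python
import os


def prepare_dirs(input_dir, output_dir):
    """Create the output directory and return the sorted CSV files in input_dir."""
    os.makedirs(output_dir, exist_ok=True)
    return sorted(
        os.path.join(input_dir, name)
        for name in os.listdir(input_dir)
        if name.lower().endswith(".csv")
    )


def dicom_paths(csv_file, column="path"):
    """Read one CSV and return its DICOM paths; the column name is an assumption."""
    import pandas as pd  # third-party; imported lazily so prepare_dirs works alone
    return pd.read_csv(csv_file)[column].dropna().tolist()
```

The processing loop would then call `dicom_paths` once per file returned by `prepare_dirs`.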

DICOM Image Processing Loop:

At the core of the script was a loop iterating through each CSV file. For each DICOM file path listed in the CSV, the script performed a series of critical transformations:

Grayscale Conversion: Using pydicom and cv2, the script converted DICOM images into a grayscale format, a prerequisite for subsequent edge detection and gradient analysis.

Contrast and Brightness Adjustment: The script applied random adjustments to contrast and brightness, simulating variations encountered in clinical settings.
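A contrast and brightness adjustment that behaves the same way at both bit depths can be written as a linear transform with clipping. This is a minimal sketch: the function name, the fixed seed, and the random ranges are hypothetical, since the original parameter ranges are not given.

```python
import numpy as np


def adjust(img, alpha, beta_8bit):
    """Apply out = alpha * img + beta, clipped to the range of img's dtype.

    beta_8bit is expressed in 8-bit gray levels and rescaled for 16-bit input,
    so both versions receive the same relative brightness shift.
    """
    top = np.iinfo(img.dtype).max                 # 255 or 65535
    beta = beta_8bit * (top / 255.0)
    out = alpha * img.astype(np.float64) + beta
    return np.clip(np.round(out), 0, top).astype(img.dtype)


rng = np.random.default_rng(0)                    # fixed seed for repeatability
alpha = rng.uniform(0.8, 1.2)                     # hypothetical contrast range
beta = rng.uniform(-20, 20)                       # hypothetical brightness range

img16 = np.array([[0, 25700], [51400, 65535]], dtype=np.uint16)
img8 = (img16 // 257).astype(np.uint8)            # 65535 / 255 = 257 exactly
print(adjust(img8, alpha, beta))
print(adjust(img16, alpha, beta))
```

Drawing `alpha` and `beta` once and applying them to both versions keeps the comparison fair, because the two images then differ only in bit depth.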

Edge Detection and Gradient Computation:

A pivotal aspect of our analysis involved edge detection and gradient computation. For 8-bit images, we used the standard OpenCV functions cv2.Canny for edge detection and cv2.Sobel for gradient computation. Adapting these techniques to 16-bit images was harder: cv2.Canny accepts only 8-bit input images, and thresholds chosen for a 0 to 255 intensity range do not carry over to a 0 to 65,535 range. To address this, we developed custom methods that extend these algorithms’ functionality, ensuring accurate edge and gradient detection at 16-bit depth. This adaptation was crucial in maintaining the integrity of the analysis, particularly when comparing the diagnostic efficacy of 8-bit versus 16-bit images.
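The custom 16-bit methods are not reproduced in the text. As an illustration of the general idea, the sketch below computes a Sobel gradient magnitude in floating point with NumPy, so it works for any unsigned integer depth, and thresholds it with a limit expressed in 8-bit units. It is a thresholded gradient, not a full Canny detector (there is no non-maximum suppression or hysteresis), and all names and the default threshold are hypothetical.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T


def filter3x3(img, kernel):
    """Correlate img with a 3x3 kernel using edge-replicated borders."""
    padded = np.pad(img.astype(np.float64), 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def gradient_magnitude(img):
    """Sobel gradient magnitude, computed in float64 regardless of bit depth."""
    gx = filter3x3(img, SOBEL_X)
    gy = filter3x3(img, SOBEL_Y)
    return np.hypot(gx, gy)


def edge_map(img, threshold_8bit=100.0):
    """Binary edge map; the threshold is given in 8-bit units and rescaled."""
    top = np.iinfo(img.dtype).max                 # 255 or 65535
    threshold = threshold_8bit * (top / 255.0)
    return gradient_magnitude(img) >= threshold
```

Because the arithmetic runs in float64, the 16-bit input is never truncated, and rescaling the threshold by `top / 255` keeps the decision rule comparable between the two depths.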

Comparative Image Generation and Output:

Post-processing, the script generated side-by-side comparative images, juxtaposing the 8-bit and 16-bit processed versions. This comparative visualization was vital for assessing the differences in image quality and diagnostic details between the two bit depths. The script saved these images in the designated output directory, providing a comprehensive visual dataset for further analysis.
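A side-by-side composite of this kind can be assembled with NumPy alone. In the sketch below, which uses hypothetical function names, each panel is scaled to 8 bits purely for display before the panels are joined; this layout is an assumption and may differ from the original script.

```python
import numpy as np


def for_display(img):
    """Scale any unsigned integer image to 8 bits for viewing or saving."""
    top = np.iinfo(img.dtype).max
    return np.round(img.astype(np.float64) * (255.0 / top)).astype(np.uint8)


def side_by_side(panels, gap=4):
    """Join equally sized grayscale panels horizontally with a white separator."""
    height = panels[0].shape[0]
    spacer = np.full((height, gap), 255, dtype=np.uint8)
    parts = []
    for i, panel in enumerate(panels):
        if i:                          # separator between panels, not before the first
            parts.append(spacer)
        parts.append(for_display(panel))
    return np.hstack(parts)
```

The resulting array can be written to disk with any image writer, for example `cv2.imwrite`. The composite itself is 8-bit, so it shows the outcome of 16-bit processing but does not preserve the 16-bit data.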

Mathematical Challenges in 16-bit Processing:

One of the notable challenges in this procedure was the adaptation of standard 8-bit image processing techniques to 16-bit images. The increased bit depth allows for a much broader range of intensity values (0 to 65,535 rather than 0 to 255), so gradient magnitudes computed from the image matrix grow by the same factor, and any parameter tuned for 8-bit data, such as an edge threshold or a brightness offset, must be rescaled. Our approach involved scaling and normalizing operations to adapt the 8-bit algorithms for 16-bit images, ensuring that the transformations applied were equivalent and comparable across both bit depths.
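The scaling argument can be made explicit. The following is a sketch under two assumptions that the text does not state: the 8-bit image $I_8$ is obtained from the 16-bit image $I_{16}$ by dividing by $s = 65535/255 = 257$ and rounding, and gradients are computed with a linear $3 \times 3$ Sobel kernel $K_x$.

$$
I_{16} = s\,I_{8} + \varepsilon, \qquad s = \frac{65535}{255} = 257, \qquad |\varepsilon| \le \frac{s}{2}
$$

Because filtering with $K_x$ is linear, the horizontal gradient of the 16-bit image splits into a scaled copy of the 8-bit gradient plus a residual term:

$$
G_x^{(16)} = K_x * I_{16} = s\,(K_x * I_{8}) + K_x * \varepsilon = s\,G_x^{(8)} + K_x * \varepsilon
$$

$$
\left| K_x * \varepsilon \right| \le \frac{s}{2} \sum_{i,j} \left| K_x(i,j) \right| = \frac{s}{2} \cdot 8 = 4s
$$

A threshold $T_8$ chosen for 8-bit gradients therefore corresponds to $T_{16} = s\,T_8$ at 16 bits. The residual $K_x * \varepsilon$, worth at most four 8-bit gray levels per gradient component, is the fine intensity information that 8-bit processing discards and 16-bit processing retains.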

This Python-driven image generation and processing procedure represents a meticulous amalgamation of computational imaging and medical analysis. By addressing the unique challenges of 16-bit image processing and ensuring methodological consistency, the study offers an in-depth comparative analysis, crucial for understanding the impact of bit depth on medical image quality and diagnostic precision.

Image Generation and Processing Procedure (Focused on Python Code Details and Transitional Context)

The image generation and processing procedure in our study leveraged a comprehensive Python-based approach, intricately designed to compare 8-bit and 16-bit image processing methodologies in medical imaging, particularly in the context of Acute Myeloid Leukemia (AML) detection.

Script Initialization and Data Organization:

Our procedure commenced with a meticulous setup of the scripting environment. Utilizing Python’s os and pandas libraries, the initial stage entailed defining and preparing directories for input data (CSV files with DICOM paths) and output (images post-processing). This foundational step was crucial for establishing a structured workflow for the subsequent image processing tasks.

DICOM Image Processing and Transformation:

The core of the script was a loop, meticulously iterating through each CSV file. Within this loop, each DICOM image underwent a series of transformations:

Grayscale Conversion: Employing pydicom for reading DICOM files and cv2 for image processing, each image was converted into a grayscale format, setting the stage for further detailed analysis.

Adjustments and Edge Detection: The script applied random contrast and brightness adjustments, followed by edge detection using cv2.Canny and gradient computation via cv2.Sobel on the 8-bit versions. These steps emphasized the structural features of the images that are relevant to diagnosis.
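For the 8-bit path, the two transformations listed above could be chained as in the following sketch, which assumes OpenCV's Python bindings (`cv2`) and NumPy are installed. The function names, adjustment ranges, and Canny thresholds are hypothetical values chosen for illustration.

```python
import random

import numpy as np


def random_adjustment(rng, alpha_range=(0.8, 1.2), beta_range=(-20, 20)):
    """Draw a contrast gain and a brightness offset; the ranges are assumptions."""
    return rng.uniform(*alpha_range), rng.uniform(*beta_range)


def process_8bit(gray, alpha, beta, low=50, high=150):
    """Adjust an 8-bit grayscale image, then return (edges, gradient magnitude)."""
    import cv2  # third-party (opencv-python); imported lazily

    # Linear contrast/brightness change, clipped back into the 8-bit range.
    adjusted = np.clip(alpha * gray.astype(np.float64) + beta, 0, 255)
    adjusted = np.round(adjusted).astype(np.uint8)

    edges = cv2.Canny(adjusted, low, high)             # binary map: 0 or 255
    gx = cv2.Sobel(adjusted, cv2.CV_64F, 1, 0, ksize=3)
    gy = cv2.Sobel(adjusted, cv2.CV_64F, 0, 1, ksize=3)
    return edges, np.hypot(gx, gy)


rng = random.Random(0)                                 # fixed seed for repeatability
alpha, beta = random_adjustment(rng)
```

Requesting `cv2.CV_64F` output from `cv2.Sobel` avoids the truncation of negative gradients that occurs when the result is kept in an unsigned 8-bit array.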

Addressing 16-bit Image Processing Complexities:

The adaptation of standard image processing techniques from 8-bit to 16-bit posed significant challenges, primarily due to the increased range of intensity values in 16-bit images. Our approach involved custom-developed algorithms to extend the standard processing techniques to accommodate 16-bit images. This adaptation was vital for ensuring that the comparative analysis between the two bit depths was both accurate and meaningful.

Generation of Comparative Visual Data:

Following the processing, the script generated composite images, displaying the 8-bit and 16-bit processed versions side-by-side. This visual juxtaposition was integral to our comparative analysis, highlighting the nuances and diagnostic differences elicited by the varying bit depths.

As we transition from the detailed description of our image processing methodology to the results and analysis section, the emphasis shifts from the technical execution to the interpretation and implications of these findings. The comparative images generated serve as a foundation for a nuanced discussion on how variations in bit depth can influence diagnostic accuracy in medical imaging, particularly in the detection and analysis of AML. The next section, therefore, delves into the results of this comprehensive processing, unraveling the clinical significance of our findings in the broader context of medical image analysis.

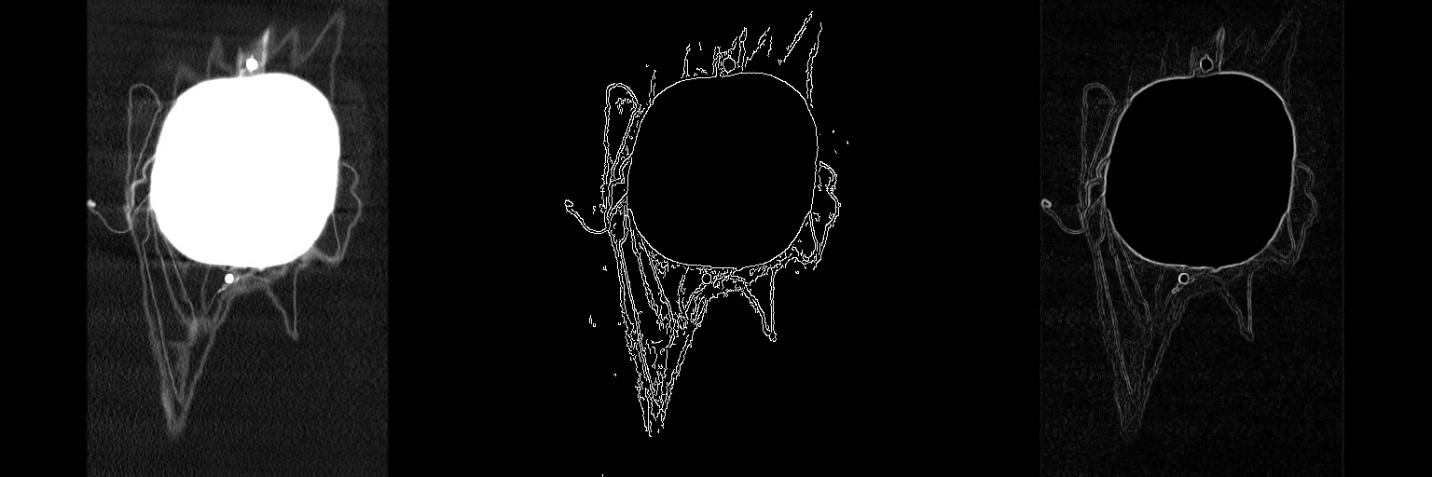

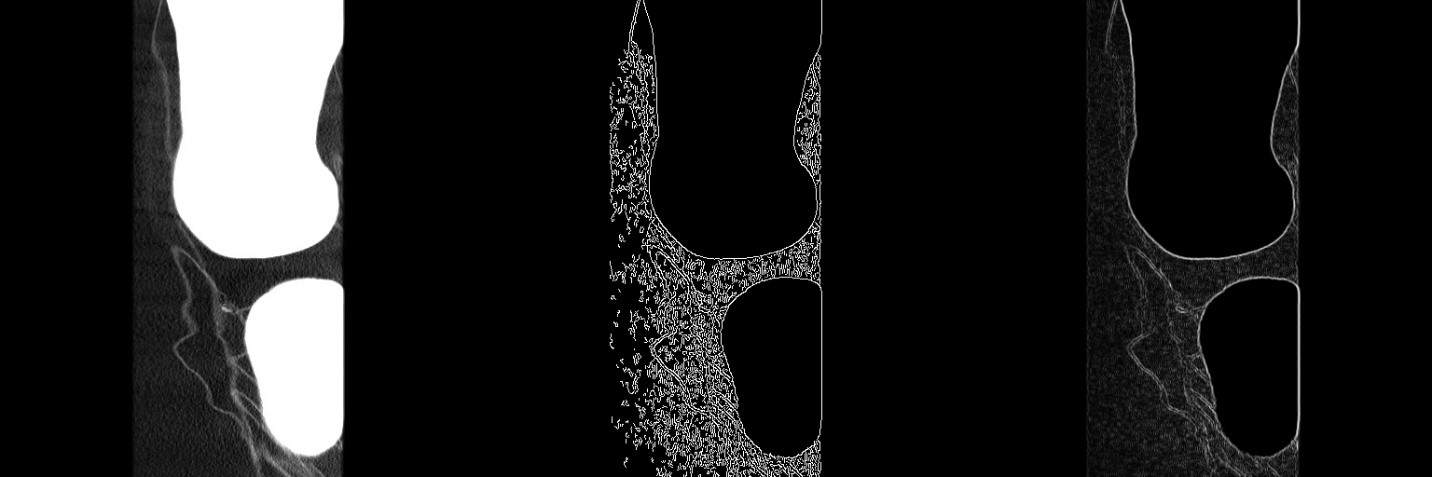

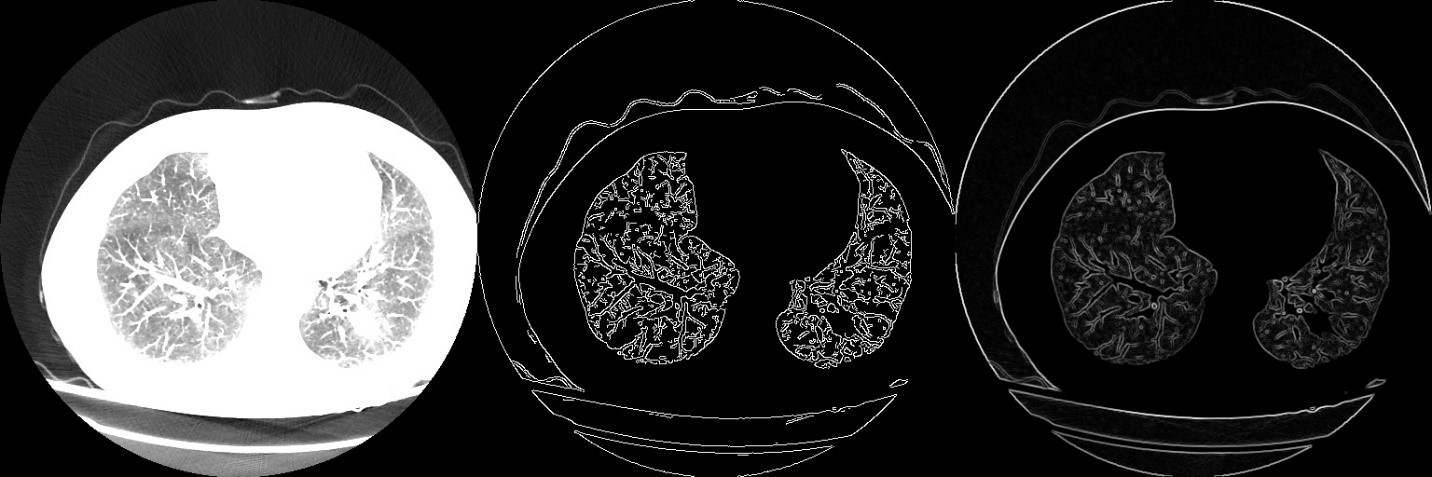

Comparative Analysis of Edge Detection in 8-bit and 16-bit Image Processing

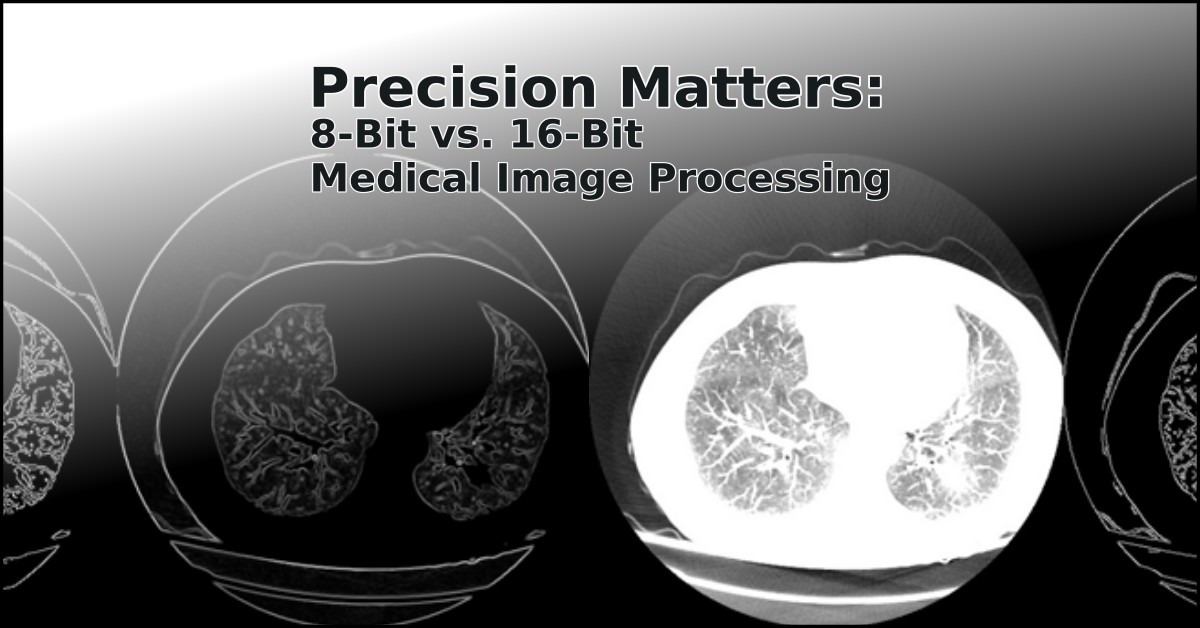

The progression of our investigation led us to a comparative analysis of edge detection algorithms applied to medical images. This analysis is visualized in a set of images, each demonstrating a distinct step in the image processing technique and its impact on the visualization of medical data.

Standard Medical Imaging View:

The images on the left represent standard views typically encountered in medical diagnostics. These baseline images serve as the control for our study, providing a comparison point for the processed outputs. They are representative of the raw visual data that radiologists and medical professionals utilize for initial diagnosis.

Edge Detection on 8-bit Images:

The central images in each set depict the outcome of applying the Canny edge detection algorithm to 8-bit images. This conventional method highlights the borders and edges within the images, a critical factor in the detection of pathologies. However, as the resulting visuals show, the process introduces a considerable number of artifacts, which could obscure critical diagnostic details.

Enhanced Edge Detection on 16-bit Images:

The images on the right, in contrast, show the result of a gradient-based edge detection algorithm adapted for 16-bit images. This 16-bit counterpart to the standard 8-bit edge detection offers a clear improvement: the edges and features within the 16-bit images are more pronounced and more distinctly defined, with markedly fewer artifacts. This clarity is particularly evident in the intricate structures and pathologies that are critical for accurate medical analysis.

Conclusion of Image Processing Efficacy:

The comparison shows that 16-bit image processing produces superior edge definition with fewer artifacts, thereby potentially increasing the diagnostic accuracy and reliability of medical imaging. The pronounced edge detail in the 16-bit processed images underscores the importance of using a higher bit depth in medical image analysis, particularly in cases where detail resolution is paramount.

Transitioning from the technical analysis of image processing techniques to the clinical implications, our subsequent discussion will delve into how these enhanced imaging methods can be integrated into clinical practice, potentially revolutionizing the diagnosis and treatment planning for various pathologies.

Clinical Implications and Conclusion

The findings from our comparative analysis of edge detection in 8-bit and 16-bit image processing have significant implications for medical imaging and diagnostics. The enhanced clarity and reduced artifacts in the 16-bit images underscore a potential shift in how medical imaging could be approached, particularly in the detection and assessment of complex diseases such as Acute Myeloid Leukemia (AML).

Enhanced Diagnostic Accuracy:

The pronounced edge definition in 16-bit images may lead to a higher diagnostic accuracy. Radiologists could detect subtle anomalies and pathologies with greater confidence, potentially leading to earlier and more accurate diagnoses. This precision is crucial in conditions where early intervention can drastically alter patient outcomes.

Integration into Clinical Workflows:

Incorporating 16-bit image processing into clinical workflows would necessitate adjustments in both hardware and software within medical facilities. This transition, while requiring investment, promises to enhance the quality of patient care. Training programs and updates to diagnostic protocols would likely follow, ensuring that medical professionals can fully leverage the benefits of this imaging technology.

Future Research Directions:

Further research is required to validate these findings across a broader spectrum of medical conditions and imaging modalities. Additionally, the development of new image processing algorithms tailored for 16-bit images could further expand the applications of this technology in medical diagnostics.

Conclusion:

This study has highlighted the substantial benefits of 16-bit image processing in medical diagnostics. The marked improvement in image quality, with more pronounced edges and fewer artifacts, could be a pivotal factor in enhancing the diagnostic process. As we look to the future, the integration of advanced image processing techniques into medical practice holds the promise of improved diagnostic capabilities, paving the way for better patient outcomes and more effective treatment strategies. The next steps will involve multidisciplinary collaboration among imaging scientists, clinicians, and technologists to bring these advancements from research to bedside, ensuring that the full potential of high-bit-depth medical imaging is realized in patient care.